K8s Resume Challenge: Deploying to Kubernetes

Deploying the E-commerce website

For the initial MVP of deploying our application to Kubernetes, the following manifests were used.

Starting with the website Deployment spec, we pass the database connection settings to the container as environment variables.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: ecom-web
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: ecom-web
      template:
        metadata:
          labels:
            app: ecom-web
        spec:
          containers:
            - name: ecom-web
              image: ariyonaty/ecom-web:v1
              ports:
                - containerPort: 80
              env:
                - name: DB_HOST
                  value: ecom-db
                - name: DB_USER
                  value: ecomuser
                - name: DB_PASSWORD
                  value: ecompassword
                - name: DB_NAME
                  value: ecomdb

Additionally, we define a Service to expose the application. Since no type is set, it defaults to ClusterIP and is reachable only from inside the cluster.

    apiVersion: v1
    kind: Service
    metadata:
      name: ecom-web
      labels:
        app: ecom-web
    spec:
      ports:
        - port: 80
      selector:
        app: ecom-web
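
To confirm that the Service selector actually matches the web replicas, a check along these lines may help. This is a minimal sketch, assuming kubectl already points at the cluster (for example via the KUBECONFIG environment variable) and the resources live in the current namespace. The function name `show_service_backends` is made up for illustration.

```shell
# Hypothetical helper: show a Service next to the pods carrying its selector label.
# Assumes the Service name and the "app" label value are identical, as in the manifests above.
show_service_backends() {
  # Print the Service with its cluster IP, port, and selector
  kubectl get service "${1:-ecom-web}" -o wide
  # Print the pods whose "app" label matches that selector, including their IPs
  kubectl get pods -l "app=${1:-ecom-web}" -o wide
}
```

Calling `show_service_backends` with no argument inspects ecom-web. With `replicas: 2`, the second command would be expected to list two pods.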

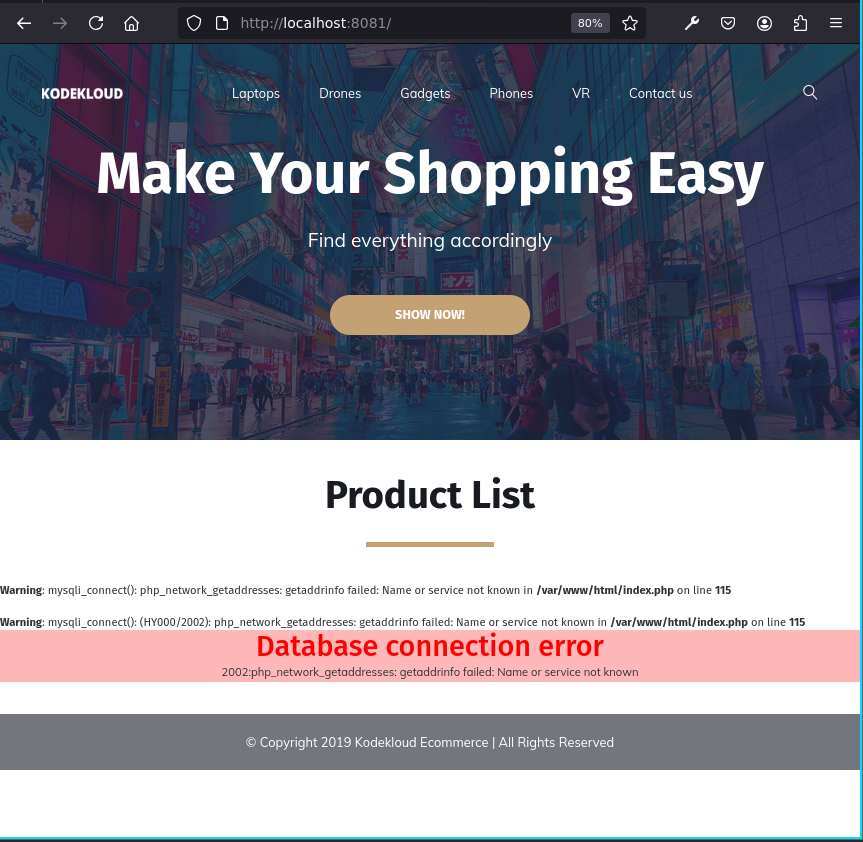

To quickly verify the expected behavior, we can run kubectl port-forward and view the website locally at http://localhost:8081.

    kubectl --kubeconfig ~/.kube/talos-kubeconfig.yaml --insecure-skip-tls-verify port-forward services/ecom-web 8081:80

But wait - an error!

As shown above, our web app is unable to connect to the database. That makes sense, though: we have yet to deploy MariaDB to the Kubernetes cluster.

Let’s write that spec now. This will include several more resources.

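Starting with the Service, we expose the MariaDB pod inside the cluster only. Because clusterIP is set to None, this is a headless Service: a DNS lookup of the service name, ecom-db, resolves directly to the pod IP. That name is what we specified in the ecom-web Deployment through the DB_HOST environment variable.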

    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: ecom-db
      labels:
        app: ecom-db
    spec:
      ports:
        - port: 3306
      selector:
        app: ecom-db
      clusterIP: None
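
The DNS behavior can be checked from inside the cluster with a throwaway pod. The following is a sketch under a few assumptions: kubectl already points at the cluster, the pod is created in the same namespace as the Service, and the `busybox:1.28` image can be pulled. The names `check_db_dns` and `dns-check` are hypothetical.

```shell
# Hypothetical helper: resolve a Service name from a temporary pod in the cluster.
check_db_dns() {
  # --rm deletes the pod once the command exits; --restart=Never creates a bare pod
  # rather than a managed workload; -i attaches so the nslookup output is printed.
  kubectl run dns-check --rm -i --restart=Never --image=busybox:1.28 \
    -- nslookup "${1:-ecom-db}"
}
```

Since `clusterIP` is `None`, a lookup of ecom-db should return the IP of the database pod itself once that pod is running. Before the database Deployment exists, the name has no backing pods to resolve to.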

In the previous section, the SQL initialization scripts for the database were mounted into the container as volumes via Docker Compose. This time, we define the scripts in a ConfigMap instead, with one key per script.

    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: ecom-db-init-scripts
    data:
      1_configure.sql: |
        CREATE USER 'ecomuser'@'%' IDENTIFIED BY 'ecompassword';
        GRANT ALL PRIVILEGES ON *.* TO 'ecomuser'@'%';
        FLUSH PRIVILEGES;
        CREATE DATABASE ecomdb;
      2_db_load_script.sql: |+
        USE ecomdb;
        CREATE TABLE products (id mediumint(8) unsigned NOT NULL auto_increment,Name varchar(255) default NULL,Price varchar(255) default NULL, ImageUrl varchar(255) default NULL,PRIMARY KEY (id)) AUTO_INCREMENT=1;
        INSERT INTO products (Name,Price,ImageUrl) VALUES ("Laptop","100","c-1.png"),("Drone","200","c-2.png"),("VR","300","c-3.png"),("Tablet","50","c-5.png"),("Watch","90","c-6.png"),("Phone Covers","20","c-7.png"),("Phone","80","c-8.png"),("Laptop","150","c-4.png");
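
If the SQL scripts already exist as files, as they did for Docker Compose, kubectl can render an equivalent ConfigMap from them instead of inlining the SQL by hand. This is only a sketch: the directory `./db-init` and the function name `render_init_configmap` are assumptions, not something taken from the project.

```shell
# Hypothetical helper: build the ConfigMap manifest from a directory of .sql files.
# Each file in the directory becomes one key, named after the file.
render_init_configmap() {
  # --dry-run=client with -o yaml prints the manifest instead of creating the object
  kubectl create configmap ecom-db-init-scripts \
    --from-file="${1:-./db-init}" \
    --dry-run=client -o yaml
}
```

The output could be redirected to a file and applied alongside the other manifests, for example `render_init_configmap ./db-init > ecom-db-configmap.yaml` (file name chosen for illustration).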

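With the ConfigMap defined, we can now reference it in the Deployment spec and mount it into the appropriate directory within the container: /docker-entrypoint-initdb.d, where the MariaDB image looks for initialization scripts when it first sets up its data directory.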

    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: ecom-db
      labels:
        app: ecom-db
    spec:
      selector:
        matchLabels:
          app: ecom-db
      template:
        metadata:
          labels:
            app: ecom-db
        spec:
          containers:
            - image: mariadb
              name: mariadb
              volumeMounts:
                - mountPath: /docker-entrypoint-initdb.d
                  name: ecom-db-cm-volume
              env:
                - name: MARIADB_ROOT_PASSWORD
                  value: ecompassword
              ports:
                - containerPort: 3306
                  name: mariadb
          volumes:
            - configMap:
                name: ecom-db-init-scripts
              name: ecom-db-cm-volume
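
Applying the manifests and checking that the init scripts took effect might look like the sketch below. It assumes kubectl already points at the cluster, that the manifests are saved together in one directory (`./manifests` is a made-up path), and that the image ships the `mariadb` command-line client. All three function names are hypothetical.

```shell
# Hypothetical helper: apply every manifest in a directory, then wait for both rollouts.
apply_manifests() {
  kubectl apply -f "${1:-./manifests}"
  # Wait for each rollout to finish, giving up after the timeout
  kubectl rollout status deployment/ecom-db --timeout=120s
  kubectl rollout status deployment/ecom-web --timeout=120s
}

# List the files the ConfigMap volume exposes inside the MariaDB container.
list_init_scripts() {
  kubectl exec deploy/ecom-db -- ls /docker-entrypoint-initdb.d
}

# Query the seeded table, reusing the root password already set in the container.
show_products() {
  # Single quotes keep $MARIADB_ROOT_PASSWORD unexpanded locally,
  # so it is resolved by the shell running inside the container.
  kubectl exec deploy/ecom-db -- sh -c \
    'mariadb -uroot -p"$MARIADB_ROOT_PASSWORD" ecomdb -e "SELECT Name, Price FROM products;"'
}
```

`list_init_scripts` should show the two keys of the ConfigMap as files, and `show_products` should print the rows inserted by the load script. Because this Deployment has no persistent volume, a restarted pod starts with an empty data directory and the scripts run again.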

After applying all the manifests, the website properly loads the data from the database. As an MVP, it works, but there’s certainly more work to do.