K8s Resume Challenge: Administration

In this post, we’ll tackle a set of tasks that mirror a typical workflow in a professional environment, on both the dev and the ops side.

Agenda:

- Add dark mode

- Publish a new version of the Docker image

- Scale application

- Liveness/Readiness Probes

- ConfigMaps / Secrets

Dark Mode

Per the instructions, dark mode is toggled by the value of the environment variable FEATURE_DARK_MODE. Web development isn’t the focus of my learning (nor my area of expertise, if I have one :p). So I implemented the feature in a rudimentary way: a separate CSS stylesheet that adds a dark background color, plus a bit of inline PHP that picks which stylesheet to load. The diff below shows the change.

```diff
$ git diff
diff --git a/learning-app-ecommerce/index.php b/learning-app-ecommerce/index.php
index d2b214e..d558b13 100644
--- a/learning-app-ecommerce/index.php
+++ b/learning-app-ecommerce/index.php
@@ -20,7 +20,15 @@
     <link rel="stylesheet" href="vendors/owl_carousel/owl.carousel.css">
     <!-- Theme style CSS -->
-    <link href="css/style.css" rel="stylesheet">
+<?php
+$darkMode = getenv('FEATURE_DARK_MODE') == 'true';
+
+if ($darkMode) {
+    echo '<link href="css/style-dark.css" rel="stylesheet">';
+} else {
+    echo '<link href="css/style.css" rel="stylesheet">';
+}
+?>
```

Next, we rebuild the ecom-web Docker image, this time tagged v2, and push it to the registry.

To roll the feature out to our production workload, we first create a ConfigMap that holds the value of the container’s environment variable:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: feature-toggle-config
data:
  FEATURE_DARK_MODE: "true"
```

Next, we update the website Deployment spec with both the new image tag and a reference to the ConfigMap:

```diff
diff --git a/manifests/website-deployment.yaml b/manifests/website-deployment.yaml
index fc6e02d..8e2df27 100644
--- a/manifests/website-deployment.yaml
+++ b/manifests/website-deployment.yaml
@@ -14,7 +14,7 @@ spec:
     spec:
       containers:
       - name: ecom-web
-        image: ariyonaty/ecom-web:v1
+        image: ariyonaty/ecom-web:v2
         ports:
         - containerPort: 80
         env:
@@ -26,3 +26,8 @@ spec:
           value: ecompassword
         - name: DB_NAME
           value: ecomdb
+        - name: FEATURE_DARK_MODE
+          valueFrom:
+            configMapKeyRef:
+              name: feature-toggle-config
+              key: FEATURE_DARK_MODE
```

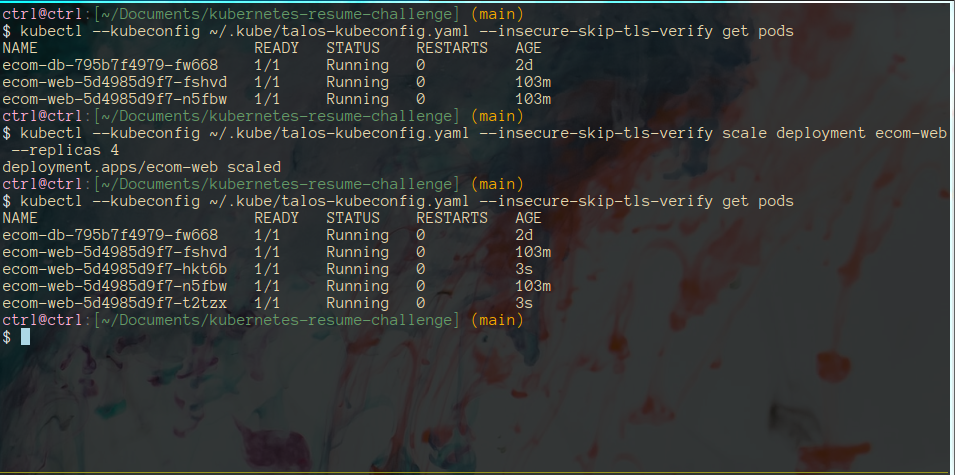
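The ConfigMap key already matches the environment variable name. So an alternative, not the approach this post uses, would be to import every key in the ConfigMap with `envFrom`. Below is a minimal sketch of the container excerpt, assuming the same container, image, and ConfigMap names as above:

```yaml
# Alternative to the per-variable configMapKeyRef above (container excerpt only).
# envFrom turns every key in the ConfigMap into an environment variable of the
# same name, so FEATURE_DARK_MODE="true" ends up in the container's environment.
containers:
  - name: ecom-web
    image: ariyonaty/ecom-web:v2
    envFrom:
      - configMapRef:
          name: feature-toggle-config
```

The trade-off is explicitness:

- `configMapKeyRef` documents exactly which variables the container depends on.
- `envFrom` silently picks up any key later added to the ConfigMap.

Either way, environment variables are read when the container starts. Flipping FEATURE_DARK_MODE in the ConfigMap therefore only takes effect once the Pods are recreated.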

Scaling the Application

With the new dark mode feature unleashed, we’re expecting a surge of traffic, because users will no longer be blinded by the light theme. To handle the extra requests, let’s increase the web app’s replica count.
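The post doesn’t show how the replica count was raised. One declarative way is to set `spec.replicas` in the Deployment manifest and re-apply it. Below is a minimal excerpt; `3` is an illustrative value, not necessarily the count actually used:

```yaml
# Excerpt of manifests/website-deployment.yaml; only the fields relevant to
# scaling are shown. Re-apply the manifest after changing replicas.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ecom-web
spec:
  replicas: 3   # illustrative value, not necessarily the count used in this post
```

The imperative equivalent is `kubectl scale deployment ecom-web --replicas=3`. Once an HPA manages the Deployment, the Kubernetes documentation recommends dropping `spec.replicas` from the manifest. Otherwise, re-applying the manifest resets whatever replica count the autoscaler chose.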

Manual scaling works, but let’s also set up automatic scaling with a Horizontal Pod Autoscaler (HPA), just in case:

```shell
kubectl --kubeconfig ~/.kube/talos-kubeconfig.yaml --insecure-skip-tls-verify autoscale deployment ecom-web --cpu-percent=50 --min=2 --max=10
```

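The HPA then adds or removes ecom-web replicas, between 2 and 10, to keep average CPU utilization near 50% of the Pods’ requested CPU. This relies on the cluster’s resource metrics API, typically provided by metrics-server.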
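The `kubectl autoscale` command above creates a HorizontalPodAutoscaler object. A roughly equivalent declarative manifest, using the `autoscaling/v2` API, is sketched below. The object name `ecom-web` is an assumption that mirrors the default `kubectl autoscale` uses (the target’s name). Each flag maps onto one field, as the comments show:

```yaml
# Declarative sketch of the HPA created by `kubectl autoscale` above.
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: ecom-web            # assumed; kubectl autoscale defaults to the target's name
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: ecom-web
  minReplicas: 2            # --min=2
  maxReplicas: 10           # --max=10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 50   # --cpu-percent=50
```

Keeping the HPA as a manifest means the autoscaling settings can be version-controlled and applied like the rest of the deployment.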

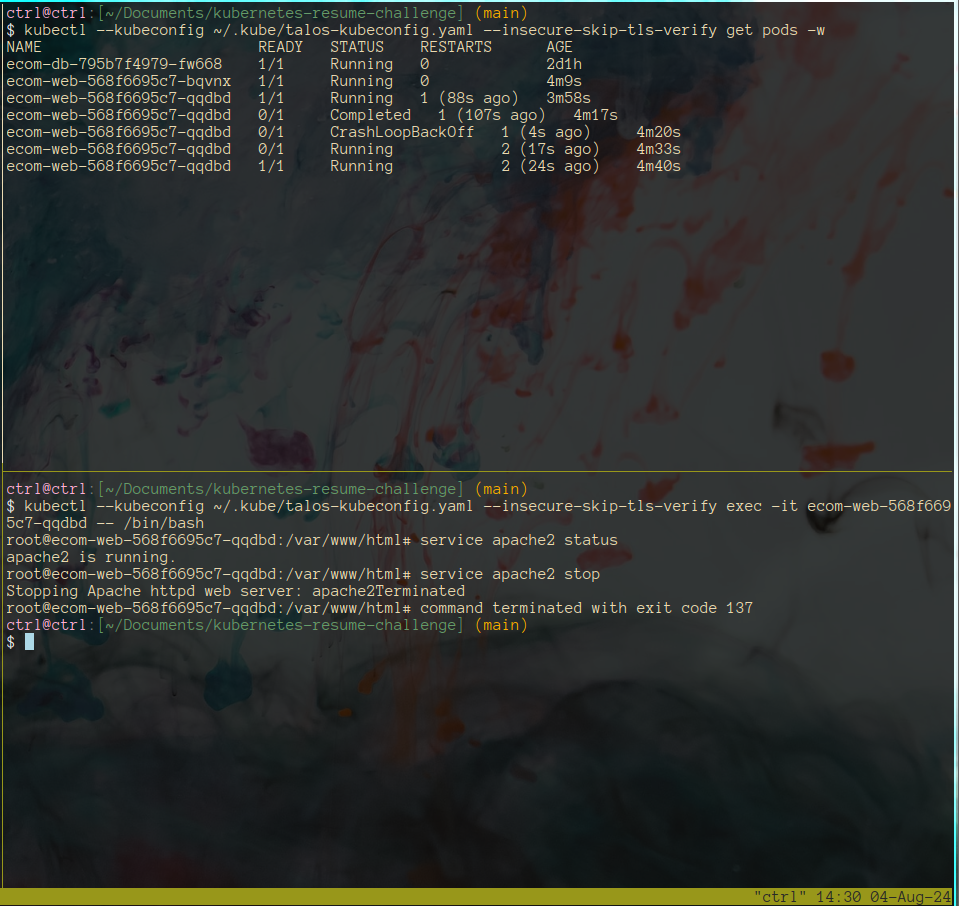
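One detail worth checking: a CPU utilization target is measured against each container’s CPU request. If the ecom-web container doesn’t declare one, the HPA can’t compute utilization, and `kubectl get hpa` typically shows the current value as `<unknown>`. The Deployment diffs in this post don’t show a `resources` block, so the excerpt below is an assumption, with illustrative values:

```yaml
# Excerpt of the ecom-web container spec. The HPA's 50% CPU target is
# computed relative to requests.cpu; the values here are illustrative.
containers:
  - name: ecom-web
    image: ariyonaty/ecom-web:v2
    resources:
      requests:
        cpu: 100m       # with a 50% target, the HPA aims for ~50m average use per Pod
        memory: 128Mi
```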

Liveness and Readiness Probes

We could create dedicated endpoints for the liveness and readiness probes. For simplicity, and to demonstrate that the probes work, we’ll point both at index.php instead.

```diff
diff --git a/manifests/website-deployment.yaml b/manifests/website-deployment.yaml
index 8e2df27..695412f 100644
--- a/manifests/website-deployment.yaml
+++ b/manifests/website-deployment.yaml
@@ -31,3 +31,15 @@ spec:
             configMapKeyRef:
               name: feature-toggle-config
               key: FEATURE_DARK_MODE
+        livenessProbe:
+          httpGet:
+            path: /index.php
+            port: 80
+          initialDelaySeconds: 5
+          periodSeconds: 5
+        readinessProbe:
+          httpGet:
+            path: /index.php
+            port: 80
+          initialDelaySeconds: 5
+          periodSeconds: 5
```

After applying the change, we can verify the probes by exec’ing into one of the Pods and killing the Apache service, which makes the probes’ HTTP GET requests fail.

Before we know it, the container restarts, and shortly after, the Pod reports a healthy status again.
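How quick “before we know it” is follows from the probe settings. The diff above only sets `initialDelaySeconds` and `periodSeconds`. The sketch below spells out the same liveness probe with the remaining fields at their Kubernetes defaults, so the restart timing is visible:

```yaml
# Same liveness probe as in the diff, with default values made explicit.
livenessProbe:
  httpGet:
    path: /index.php
    port: 80
  initialDelaySeconds: 5   # wait 5s after container start before the first probe
  periodSeconds: 5         # probe every 5s
  timeoutSeconds: 1        # default: a response slower than 1s counts as a failure
  failureThreshold: 3      # default: restart after 3 consecutive failures
  successThreshold: 1      # default (and must be 1 for liveness probes)
```

With these values, three consecutive failures five seconds apart are needed. So a container whose Apache has been killed is restarted roughly 10 to 15 seconds later. For the readiness probe, the same threshold controls how long it takes for the Pod to be marked not Ready and taken out of its Service’s endpoints; a failing readiness probe does not restart the container.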

Kubernetes Secrets

Recall that we hard-coded the database connection variables into our website Deployment spec. For obvious reasons, this doesn’t sit well with our fictitious cybersecurity team, so let’s get rid of them.

We start by creating a Kubernetes Secret:

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: db-info
data:
  hostname: ZWNvbS1kYg==
  password: ZWNvbXBhc3N3b3Jk
  username: ZWNvbXVzZXI=
  dbname: ZWNvbWRi
```

(Pssst, it’s not actually secret, just base64-encoded. Don’t tell the cyber team.)
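Base64 is an encoding rather than encryption. So the same Secret can also be written in plain text using the `stringData` field, and the API server base64-encodes the values when it stores them. The values below are what the `data` entries above decode to, and they match the values previously hard-coded in the Deployment:

```yaml
# Equivalent to the db-info Secret above: stringData takes plain-text values,
# which Kubernetes stores base64-encoded under .data on write.
apiVersion: v1
kind: Secret
metadata:
  name: db-info
type: Opaque
stringData:
  hostname: ecom-db
  password: ecompassword
  username: ecomuser
  dbname: ecomdb
```

Running `kubectl get secret db-info -o yaml` afterwards shows the same base64 `data` values as the original manifest. That is exactly why the cyber team shouldn’t find out.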

Now we can reference the Secret’s keys in our Deployment:

```diff
diff --git a/manifests/website-deployment.yaml b/manifests/website-deployment.yaml
index 695412f..5208f2a 100644
--- a/manifests/website-deployment.yaml
+++ b/manifests/website-deployment.yaml
@@ -19,13 +19,25 @@ spec:
         - containerPort: 80
         env:
         - name: DB_HOST
-          value: ecom-db
+          valueFrom:
+            secretKeyRef:
+              name: db-info
+              key: hostname
         - name: DB_USER
-          value: ecomuser
+          valueFrom:
+            secretKeyRef:
+              name: db-info
+              key: username
         - name: DB_PASSWORD
-          value: ecompassword
+          valueFrom:
+            secretKeyRef:
+              name: db-info
+              key: password
         - name: DB_NAME
-          value: ecomdb
+          valueFrom:
+            secretKeyRef:
+              name: db-info
+              key: dbname
         - name: FEATURE_DARK_MODE
           valueFrom:
             configMapKeyRef:
```

Reflecting on the agenda:

- Add dark mode

- Publish a new version of the Docker image

- Scale application

- Liveness/Readiness Probes

- ConfigMaps / Secrets

Conclusion

There was some extra credit on offer, and there were other directions I could have taken the Kubernetes Resume Challenge. Still, my goal of getting some hands-on practice to refresh my experience has been met, so I’ll call it quits here.

Thanks for following along, and feel free to reach out if you have any feedback or ideas.