How To: Deploy an Internal Container Registry in Kubernetes

DigitalOcean Kubernetes Challenge

Hey there, reader!

About a week ago (12/22), I happened to catch a DigitalOcean stream on Twitch and learned of the Kubernetes Challenge they were hosting. Having just gone on break from school, I thought, “Hey, I’ve heard of this kubernoodles stuff before,” and with no prior experience and about a week until the challenge due date, I decided to spend my break finally learning Kubernetes and participating in the challenge. Learn by doing, right?!

One of the challenges aimed at beginners like myself was to deploy an internal container registry. Kubernetes does not provide an internal container registry by default, but having one is quite useful. This challenge piqued my interest as someone just starting to delve into the DevOps world and playing more with Docker and containers.

Just a note before continuing on with the challenge: My goal for this was to challenge myself and learn. That said, I am almost certain that there are things I am doing here that aren’t standard practice, possible security no-no’s, etc. So if you have any tips or pointers, I’d love to learn more. Feel free to reach out!

Cheers and happy holidays :)

Project

In the challenge, two of the suggested container registries were Harbor and Trow. While Harbor appeared to be a more complete and feature-rich solution, I decided to go with Trow because it is more lightweight, which makes it better suited to running inside an individual cluster.

The source code and a less-polished README can be found in my GitHub repo.

Alright, let’s jump in!

Infrastructure Provisioning

I initially created the Kubernetes cluster using the DigitalOcean dashboard. However, wanting to commit to DevOps practices, I decided to get some hands-on experience with Terraform at the same time. Terraform, an Infrastructure as Code tool, makes it more efficient to deploy, manage, and replicate resources without the overhead of dealing with the UI.

First, some prerequisites:

- terraform

- DigitalOcean token

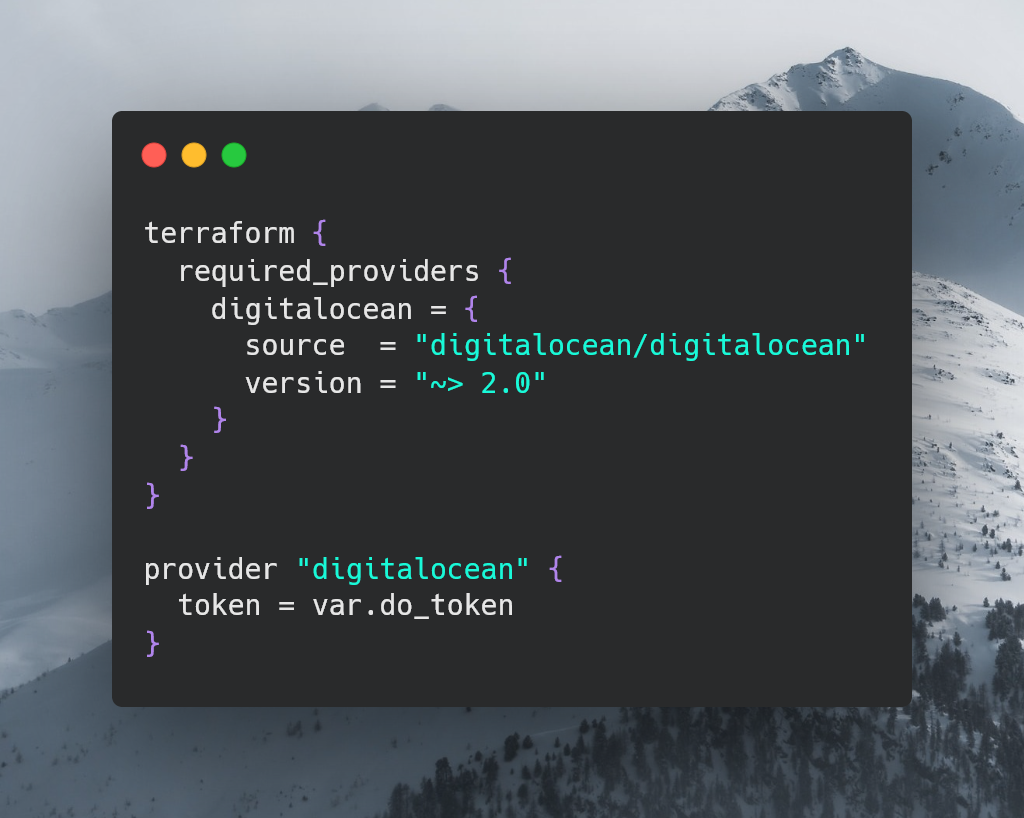

Create a file called provider.tf.

This tells Terraform that we want to use the DigitalOcean provider and appropriate variables.

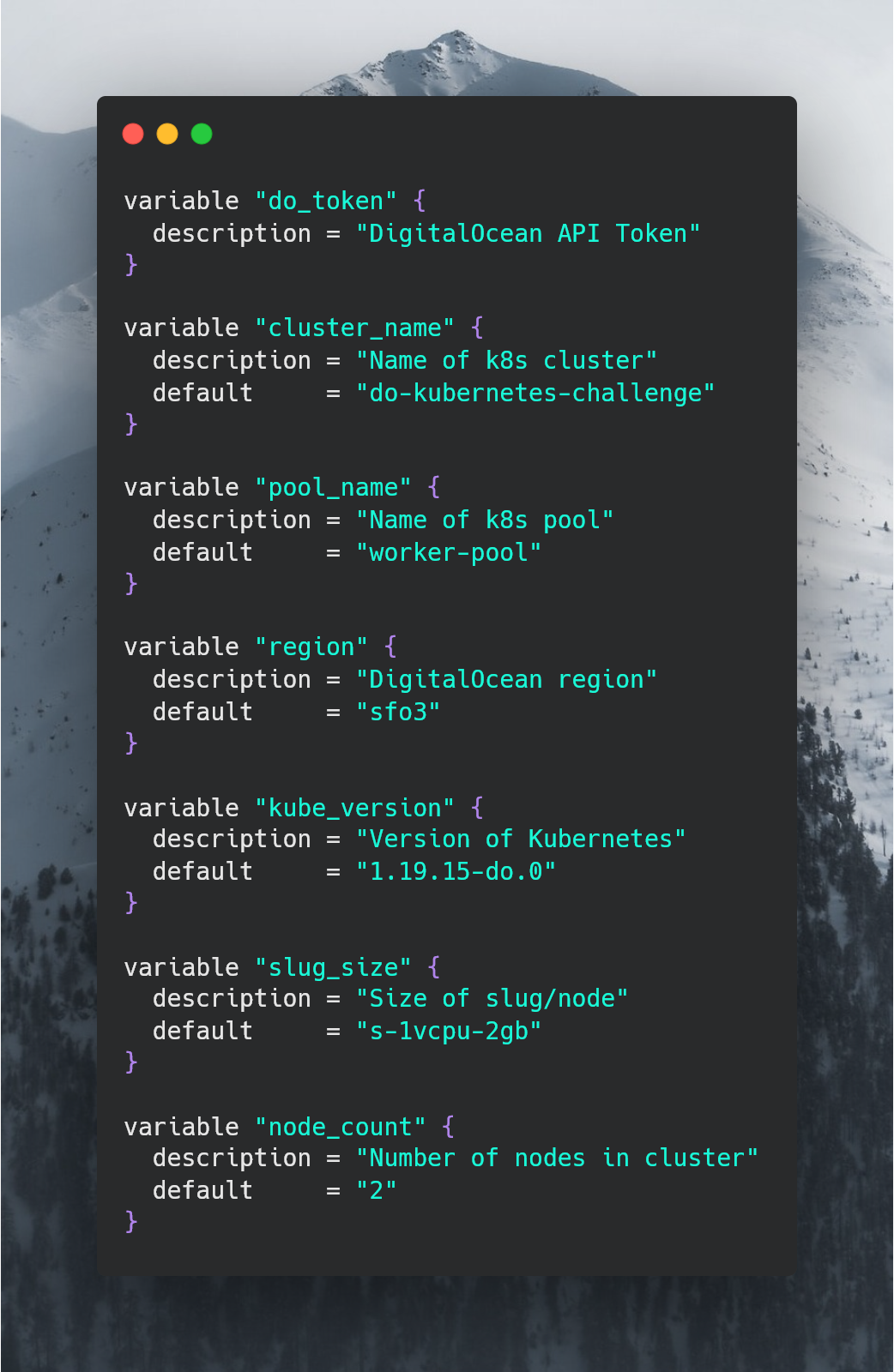

Next, let’s create a Terraform variables file to allow for a more streamlined way to modify the infrastructure if necessary. We’ll call this variables.tf.

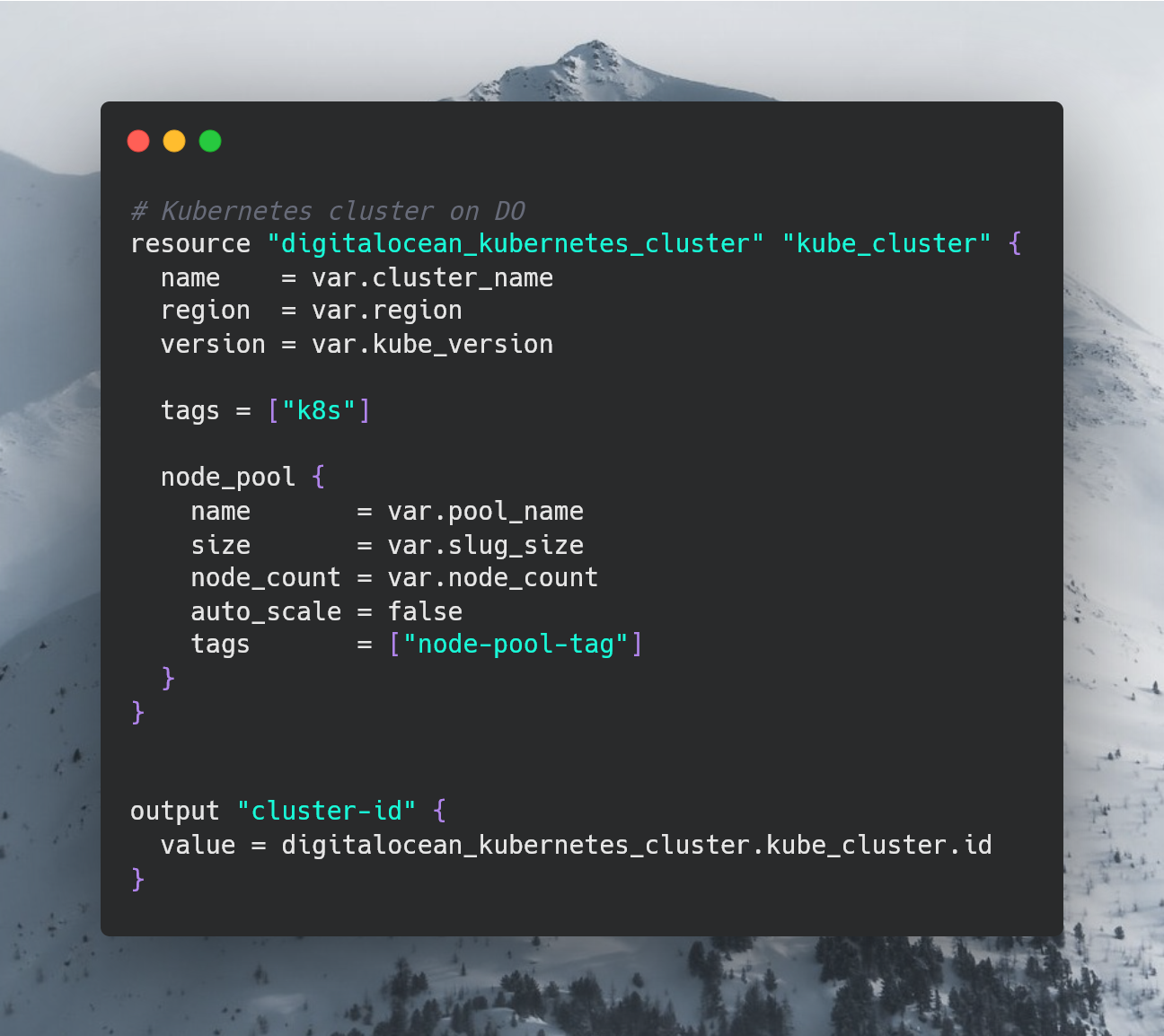

Finally, let’s get on with defining the kubernetes cluster. Creating a file called cluster.tf, we have:

One thing to note is that cluster.tf also defines an output, cluster-id, which will be used soon.

Alright, we have our terraform files, let’s get provisioning!

First start by exporting our DO token: export DIGITALOCEAN_TOKEN=<TOKEN>

Next, run terraform init in the directory with the *.tf files to initialize the project.

Preview the planned changes with: terraform plan -var "do_token=${DIGITALOCEAN_TOKEN}"

Apply the configuration with: terraform apply -var "do_token=${DIGITALOCEAN_TOKEN}"
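As an aside, Terraform also reads input variables from environment variables named TF_VAR_<name>, so the -var flag can be dropped. Here is a minimal sketch, assuming the variable is called do_token as in the commands above; the function name export_tf_token is made up for illustration.

```shell
# Hypothetical helper: copy DIGITALOCEAN_TOKEN into TF_VAR_do_token so that
# terraform plan/apply pick up the token without a -var flag.
export_tf_token() {
  if [ -z "${DIGITALOCEAN_TOKEN:-}" ]; then
    echo "DIGITALOCEAN_TOKEN is not set" >&2
    return 1
  fi
  export TF_VAR_do_token="${DIGITALOCEAN_TOKEN}"
}
```

With that in place, something like export_tf_token && terraform plan should behave the same as the longer commands above.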

After a bit of time, we should see the infrastructure provisioned and the cluster-id output. Copy the ID and run the helper script to fetch the kubeconfig for the deployed cluster. The helper script simply downloads the kubeconfig file and sets it to the KUBECONFIG environment variable for future use with kubectl.

Running the helper script: source helpers/fetch_config.sh <CLUSTER-ID>
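For readers without the repo at hand, here is a rough sketch of what such a helper could look like. This is not the actual helpers/fetch_config.sh; it assumes curl is installed, that DIGITALOCEAN_TOKEN is exported, and it uses the DigitalOcean API's kubeconfig endpoint. The function names and the output file name are made up for illustration.

```shell
# Sketch of a kubeconfig helper; NOT the repo's actual fetch_config.sh.
# Assumes curl is installed and DIGITALOCEAN_TOKEN is exported.

# Build the DigitalOcean API URL for a cluster's kubeconfig.
kubeconfig_url() {
  echo "https://api.digitalocean.com/v2/kubernetes/clusters/$1/kubeconfig"
}

# Download the kubeconfig for cluster ID $1 and point KUBECONFIG at it.
fetch_config() {
  local cluster_id="$1"
  if [ -z "$cluster_id" ]; then
    echo "usage: fetch_config <CLUSTER-ID>" >&2
    return 1
  fi
  # Hypothetical output file name, written to the current directory.
  local out="${PWD}/kubeconfig-${cluster_id}.yaml"
  curl -fsS -H "Authorization: Bearer ${DIGITALOCEAN_TOKEN}" \
    "$(kubeconfig_url "$cluster_id")" -o "$out" || return 1
  export KUBECONFIG="$out"
}
```

The functions use return rather than exit because the file is meant to be sourced; exit would close the calling shell. Sourcing is also what lets the exported KUBECONFIG survive in the current session.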

This concludes the initial infrastructure provisioning with Terraform.

Deploying Trow

Initially, the plan was to install Trow using Helm. However, as noted later in this post, I ran into some challenges and used Trow’s quick-install script instead.

Clone the Trow repo, then proceed with:

cd trow/quick-install/

./install.sh # Select 'y' for all prompts.

And just like that, Trow is installed inside our cluster!

At this point, we’re able to push images. For the sake of the demonstration, I created a simple Python Flask app that prints the name of the host it’s running on. Containerizing and tagging (see app/ in the GitHub repo):

docker tag flask_demo:0.0.1 trow.kube-public:31000/flask_demo:0.0.1

docker push trow.kube-public:31000/flask_demo:0.0.1
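The build step isn’t shown above, so here is a small sketch that wraps build, tag, and push together. It assumes a Dockerfile lives in the app/ directory and reuses the registry address from the commands above; the function names are made up for illustration.

```shell
# Registry address as used in the commands above.
REGISTRY="trow.kube-public:31000"

# Compose a full image reference: <registry>/<name>:<tag>.
image_ref() {
  echo "${REGISTRY}/$1:$2"
}

# Build, tag, and push in one go.
# Assumes a Dockerfile in the given build context (default: app).
build_and_push() {
  local name="$1" tag="$2" context="${3:-app}"
  docker build -t "${name}:${tag}" "$context" &&
    docker tag "${name}:${tag}" "$(image_ref "$name" "$tag")" &&
    docker push "$(image_ref "$name" "$tag")"
}
```

Calling build_and_push flask_demo 0.0.1 would then run the same tag and push commands shown above, preceded by a build.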

Demo

Great, at this point, our image is (hopefully) deployed to the Trow container registry inside our Kubernetes cluster. But how can we use it?

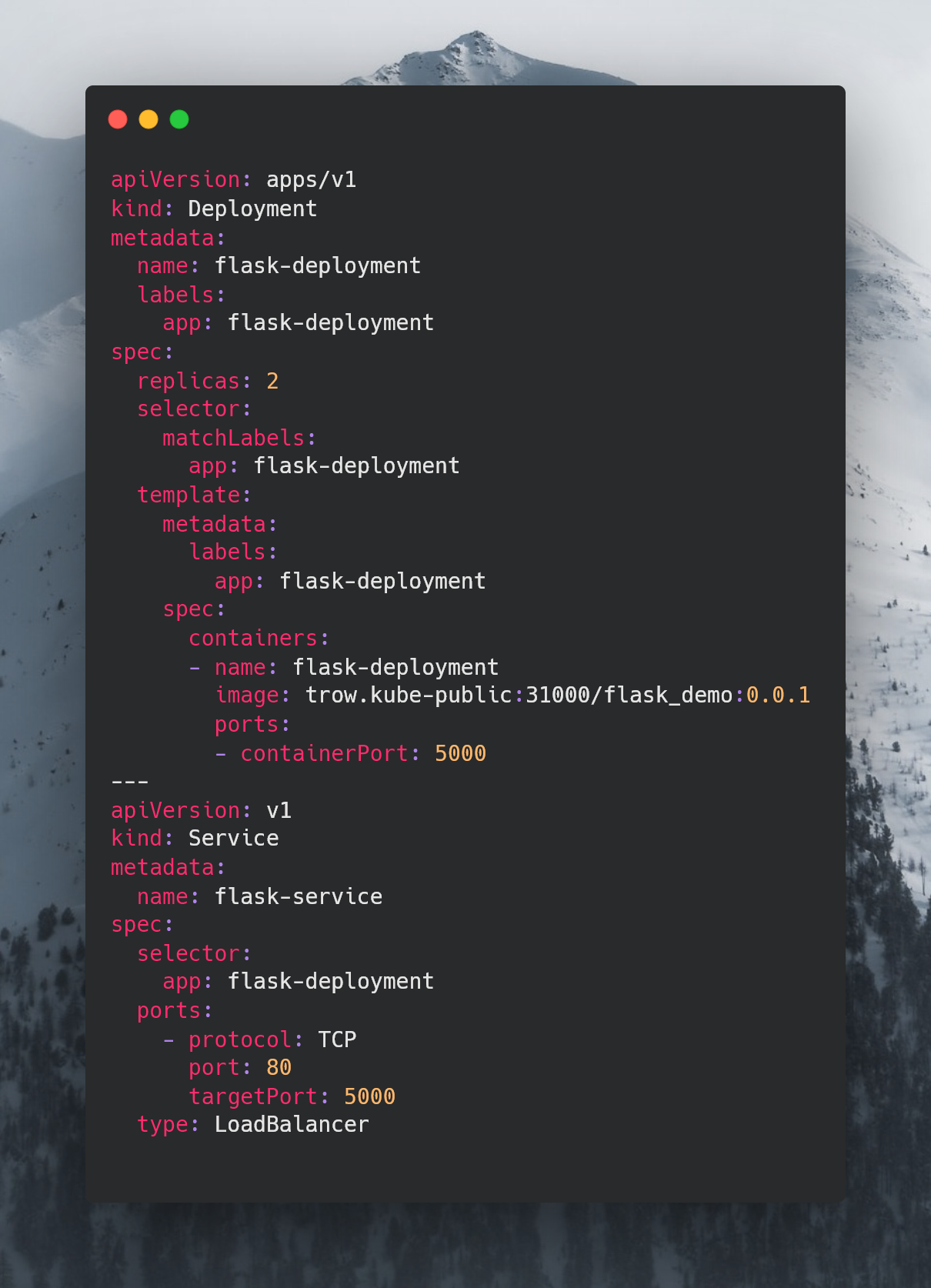

Let’s create a simple deployment:

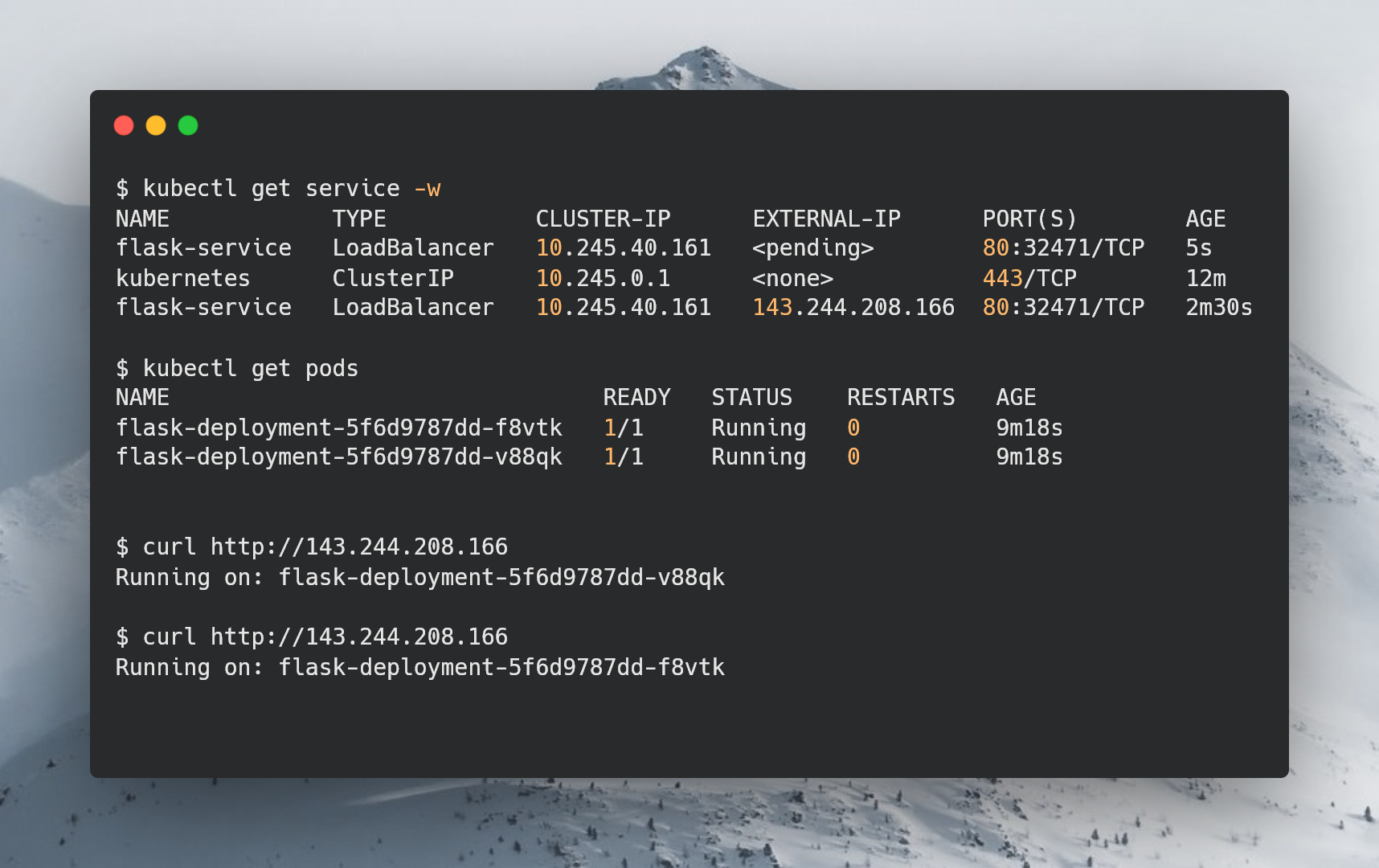

In our deployment.yaml, we define our deployment and a load balancer. Note that the ‘image’ points to the pushed flask_demo app in Trow. Our deployment is set to run two pods, so we can expect requests to the IP of the load balancer to alternate between hosts.
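In case the screenshot is hard to read, here is a sketch of a manifest with the same shape: a Deployment with two replicas plus a LoadBalancer Service. The resource names, labels, and ports are assumptions (Flask’s default port 5000 is used), not a copy of the repo’s deployment.yaml, and render_manifest is a made-up helper.

```shell
# Print a Deployment plus a LoadBalancer Service for the given image.
# Names, labels, and ports are assumptions, not the repo's deployment.yaml.
render_manifest() {
  local image="$1" replicas="${2:-2}"
  cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: flask-demo
spec:
  replicas: ${replicas}
  selector:
    matchLabels:
      app: flask-demo
  template:
    metadata:
      labels:
        app: flask-demo
    spec:
      containers:
        - name: flask-demo
          image: ${image}
          ports:
            - containerPort: 5000   # Flask's default port (assumed)
---
apiVersion: v1
kind: Service
metadata:
  name: flask-demo
spec:
  type: LoadBalancer
  selector:
    app: flask-demo
  ports:
    - port: 80
      targetPort: 5000
EOF
}
```

Running render_manifest trow.kube-public:31000/flask_demo:0.0.1 2 > deployment.yaml would write the file, and the selector on the Service is what ties the load balancer to the two pods.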

Run the deployment and service: kubectl create -f deployment.yaml

Wait for the IP of the load balancer to show: kubectl get service -w

Once an IP is assigned, you can visit http://<IP>. Refreshing a couple of times should show the load balancer in effect, with the hostname changing between requests.
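If you’d rather not mash the refresh button, the same check can be sketched from the terminal. This assumes curl is installed and that the app answers with the hostname as a single line of text; both function names are made up for illustration.

```shell
# Count how often each distinct response line appears on stdin.
tally_responses() {
  awk '{ count[$0]++ } END { for (r in count) print count[r], r }' | sort -k2
}

# Request the page N times (default 10) and tally the responses.
# Assumes the app returns the hostname as a single line of text.
probe_lb() {
  local ip="$1" n="${2:-10}" i=0
  while [ "$i" -lt "$n" ]; do
    curl -fsS "http://${ip}/"
    echo            # make sure each response ends with a newline
    i=$((i + 1))
  done | grep -v '^$' | tally_responses
}
```

Calling probe_lb <IP> 20 should list each pod’s hostname with the number of requests it served. With two healthy pods, two lines are expected, though the split won’t necessarily be even.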

Just like that, we’ve used Terraform to provision a Kubernetes cluster on DigitalOcean. Next, we installed Trow, an internal container registry, in our cluster, and finally, we deployed our Flask demo using the image in Trow.

Wait! Can’t forget to get rid of the resources if they’re not being used... right? To delete and destroy the resources, run:

kubectl delete -f deployment.yaml

terraform destroy -var "do_token=${DIGITALOCEAN_TOKEN}"

And bam! It’s gone!

Conclusion

I hope you enjoyed reading this writeup here. I enjoyed the challenge (oh, so many challenges; see below) and the learning opportunity it provided. Thanks DigitalOcean! And hey, if at some point in the future you’re inclined to do this yourself, here’s a referral which gets you $100 worth of credit on DigitalOcean. You can basically run all of this for free for at least a month!

Challenges

Many challenges arose as I worked through this, uhhh, challenge.

Initially, I didn’t use Terraform and attempted everything manually. I noticed that I had defaulted to Kubernetes version 1.21.X. When I got to Trow, it kept erroring out. I was able to push and pull images, but kubectl wasn’t, and kept failing with an x509 certificate error. Only after 2 days or so of hair-pulling did I stumble on the issue: the containerd runtime, the default for Kubernetes versions following 1.20.X, is not supported by the Trow installer. Hence, I had to revert to version 1.19.X for it to work.

After switching to Terraform, I started to install Trow using Helm. I noticed, however, that volumes were being created that weren’t tracked by Terraform. Not wanting to spend too much time debugging and jumping down a rabbit hole, I opted out of the Helm installation.

The load balancer created by the Kubernetes service isn’t tracked by Terraform either. I thought that the digitalocean_loadbalancer resource in Terraform would work to provision the load balancer with the rest of the infrastructure, but I encountered issues which I believe come down to the difference between a regular load balancer and a Kubernetes load balancer, and which would require the kubernetes provider. To be continued…

Future Improvements

- Include terraform resource for domain and DNS record

- Include kubernetes deployment and service as part of terraform